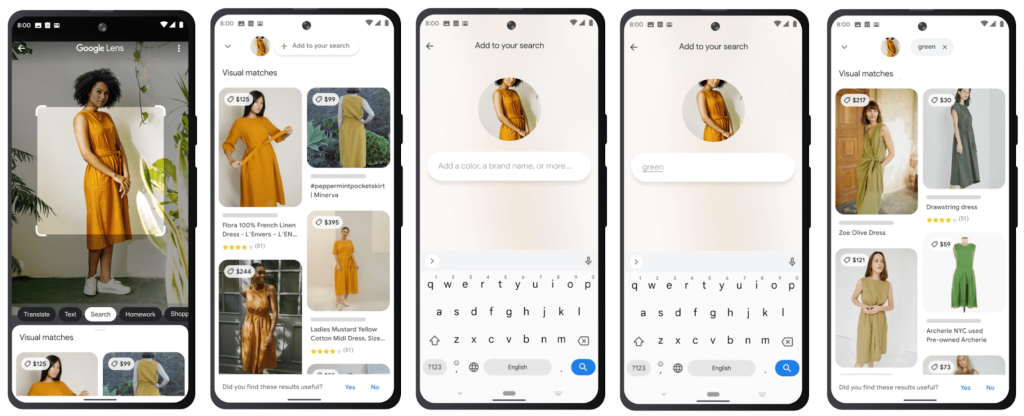

Entering a search term on Google? We’ve done that hundreds of times. Starting a Google search with a picture? We do that more and more often, too. Now Google lets us add text to an image search and thus search in an even more targeted way. Below, we explain exactly how this works and why this great feature still comes with a small catch.

The laptop bag on the neighbouring table looks great … If only it were available in blue. Google’s new search function Multisearch makes this possible: simply open Google Lens, take a picture of the object you are looking for (e.g. the perfect laptop bag in the wrong colour) and add text (e.g. “blue”). The AI-based search returns offers for the laptop bag or similar products in blue. Sounds too good to be true? In Germany, it is, because Multisearch is only available in English so far. (But we have found a small workaround for German-language searches; more on that below.) Over the course of the year, the function is to be offered worldwide in English, and 70 languages are to be added in the next few months. Details, for example on a German rollout, are not yet available. If the English beta phase is successful, nothing should stand in the way of a German version.

Finding clothes in other colours is all well and good. But can Multisearch do more? Absolutely!

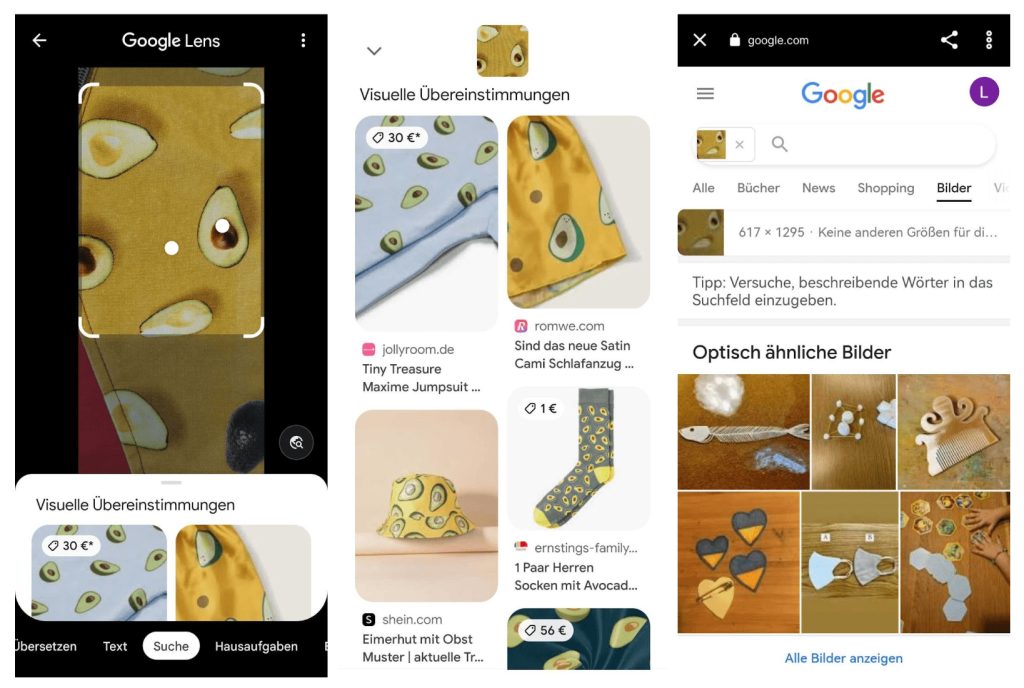

As promised, here is the workaround for those of you who (also) can’t wait: if you start the image search via Google Lens, you can jump to the regular Google search with the small search button at the bottom right. There, you can add a search term to the image and get images that roughly match both the photo and the term. However, no sophisticated AI seems to be used here, as our example shows very well:

While Google Lens clearly identifies the avocados in the picture as such, Google Search fails to do so. The search does not recognise any details, but rather abstracts the image. In other words: a yellow teapot can also be found in blue, and a photo of a green background with the search term “tie” brings up green ties. However, Google Search does not find a bag with the avocado pattern from the photo. As a stopgap, this workaround is acceptable for simple searches, but we are still looking forward to the full functionality of Google Multisearch. So that we can finally find ties that match the pattern of our laptop bags!

As if the basic function of Google Multisearch weren’t revolutionary enough, the search engine has gone one better: thanks to a new update in Google Lens, users will soon be able to combine a picture with the addition “near me” to find restaurants, shops and service providers in their vicinity. Remember the example of the cocktail whose recipe you can find with Multisearch without knowing the name of the drink? If you don’t feel like mixing the cocktail yourself, you can add the search term “near me” to the picture and you will be shown bars near you that offer this cocktail. It works similarly with photos of food, clothes or garden shears. Searching has never been so easy. The function will be rolled out in the USA in late autumn and will hopefully reach Germany next year.

And what comes next? The recognition of entire environments instead of just individual images. Let’s take the example that Google itself uses in its blog as an illustration: with scene recognition in Google Multisearch, users can scan a shelf full of chocolate bars. Google Lens displays information about the different chocolates in real time (ratings, cocoa content, varieties, etc.). As with the already familiar Multisearch, we can refine our search (e.g. with the terms “dark” or “without nuts”). Scene recognition then highlights the bars on the shelf that match the search criteria.

Overlaying digital information onto a real environment … Doesn’t that remind us of something? It certainly does!

The search function in scene recognition sounds a lot like an augmented reality application. And such applications work best with suitable wearables such as the AR glasses Google Glass. (Author’s note: strictly speaking, these are not glasses but a computer that sits on just one side. That is why the Google product is called Google Glass, not Google Glasses, which I also only discovered while researching this article. The more you know …)

The product failed to establish itself when it launched in 2014. Its further development, Glass Enterprise Edition 2, primarily serves companies in manufacturing and logistics as well as in the healthcare sector. Multisearch and scene recognition could make Google Glass a relevant factor in the wearables sector again and, at last, interesting for B2C sales too.

The good news for all SEO managers: Google Multisearch will not shake search engine optimisation to its foundations (*sigh of relief in the WEVENTURE SEO team*).

In the Google Hangouts session on 29 April 2022, Google’s Senior Webmaster Trends Analyst John Mueller made it clear that there is nothing you can actively do as an SEO manager to appear in Multisearch results. If you do everything right, meaning your images are indexed and your content is findable and relevant, Google can lead users to your images for the corresponding search queries.

So we continue to do what we have always done: we use relevant images in meaningful places (preferably also in the body text), give them meaningful titles and alt texts, and include suitable keywords. Of course, we refrain from pointless, counterproductive keyword stuffing as well as from other black-hat SEO strategies.
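For readers who like to check such basics automatically, here is a minimal sketch in Python that lists images without a meaningful title or alt text. It is our own illustration, not a Google tool and not something that guarantees a place in Multisearch results; the names `ImageAuditParser` and `audit_images` as well as the sample HTML are made up for this example.

```python
from html.parser import HTMLParser


class ImageAuditParser(HTMLParser):
    """Collects <img> tags whose alt or title attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.findings = []  # list of (src, [names of missing attributes])

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # An attribute counts as missing if it is absent, has no value,
        # or contains only whitespace.
        missing = [
            name
            for name in ("alt", "title")
            if not (attributes.get(name) or "").strip()
        ]
        if missing:
            self.findings.append((attributes.get("src") or "", missing))


def audit_images(html):
    """Return one (src, missing_attributes) tuple per incomplete image."""
    parser = ImageAuditParser()
    parser.feed(html)
    parser.close()
    return parser.findings


# Hypothetical page snippet, purely for illustration.
sample = """
<img src="bag-blue.jpg" alt="Blue laptop bag" title="Blue laptop bag">
<img src="teapot.jpg" alt="">
<img src="tie.jpg" title="Green tie">
"""

for src, missing in audit_images(sample):
    print(f"{src}: missing {', '.join(missing)}")
```

A check like this only tells you that a text is present, not whether it is any good. Whether an alt text actually describes the image still has to be judged by a human.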

However, our SEO managers see a small shortcoming: Currently, traffic via Multisearch cannot be tracked separately, but is assigned to the “Direct Traffic” channel. Unfortunately, this makes a clean and precise traffic analysis impossible … We hope that Google will correct this before the application is rolled out further.

In my eyes, Multisearch enables exactly what Google’s Senior Vice-President Prabhakar Raghavan describes as the goal of the application: we can search the way we do in real life. At least partially. We can combine visual impressions with concrete questions and thus get a genuinely relevant answer to our question.

All Google-typical data concerns aside, I am a fan of Multisearch. If the beta version is successfully tested and rolled out internationally, we can look forward to a “search revolution”: because search results will become real search experiences. In my opinion, Google rightly calls its Multisearch feature one of the “most significant updates to Google Search in several years”.

I am particularly interested in the possibilities that scene recognition will offer us. Who has never stood completely overwhelmed in front of a shelf in the DIY store, not knowing which glaze, screw or pipe clamp is the right one for the project at hand? Google could solve such problems in the future. Admittedly, there will then be ten people standing in the aisle pointing their smartphone cameras at the screw shelf. But at least Google Lens can also boost customer satisfaction.

With Multisearch and its extensions (including local information and scene recognition), Google delivers a real innovation for Google Search. We are curious to see when Multisearch will be available in German and whether the function will also breathe new life into Google Glass.